Improving Fairness and Reducing Bias for AI/ML

Machine learning (ML) approaches have been broadly applied to the prediction of patient risks. ML may also reduce societal health burdens, assist in health resource planning, and improve health outcomes. However, the fairness of these ML models across ethnoracial or socioeconomic subgroups is rarely assessed or discussed. There is an unmet need to investigate the overlap between underserved subpopulations and under- or overdiagnosed patients in order to quantify and interpret the algorithmic biases of ML models.

Fairness is defined differently across domains. In healthcare specifically, fairness addresses whether an algorithm treats subpopulations equitably. The issue has recently attracted more attention as ML-driven decision support systems are increasingly deployed in practice. Algorithmic unfairness, or bias, in healthcare may introduce or exaggerate health disparities. For example, failing to account for sex/gender differences between individuals can lead to suboptimal results and discriminatory outcomes. We seek to build solutions for exposing and mitigating biases in ML by identifying potential biases at multiple stages of study design, and we suggest that researchers apply strategies to reduce the risk of bias. We have proposed a series of novel methods that integrate Social Determinants of Health (SDOH) variables into clinical predictive models in order to improve the fairness of ML models.

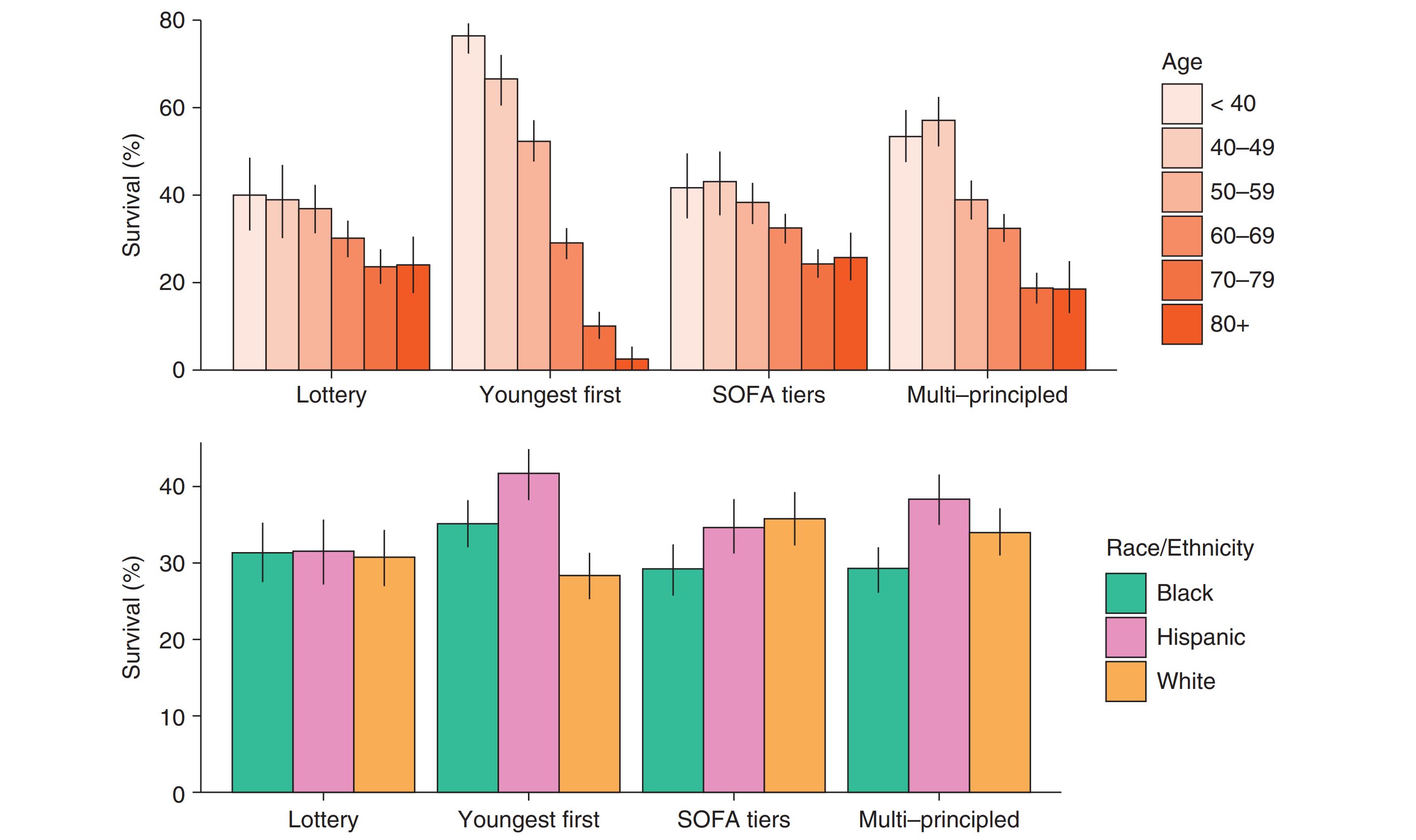

For example, in our AJRCCM paper titled "Simulation of Ventilator Allocation in Critically Ill Patients with COVID-19" (link on the right), we used Monte Carlo simulation to evaluate pragmatic ventilator allocation systems for critically ill adult patients with laboratory-confirmed COVID-19 who required mechanical ventilation at three healthcare settings in the greater Chicagoland area between March 2020 and February 2021. We found that Black patients had higher SOFA scores and a higher prevalence of comorbidities, which placed them in lower priority tiers and resulted in significantly fewer ventilator allocations and, in turn, significantly lower survival (see Figure below). While unintended, this disparity highlights the potential of “color-blind” protocols to exacerbate health disparities. In their current form, most US ventilator allocation protocols would likely amplify existing healthcare disparities, layering inequitable resource allocation onto the pandemic's already disproportionate health impact on disadvantaged communities.

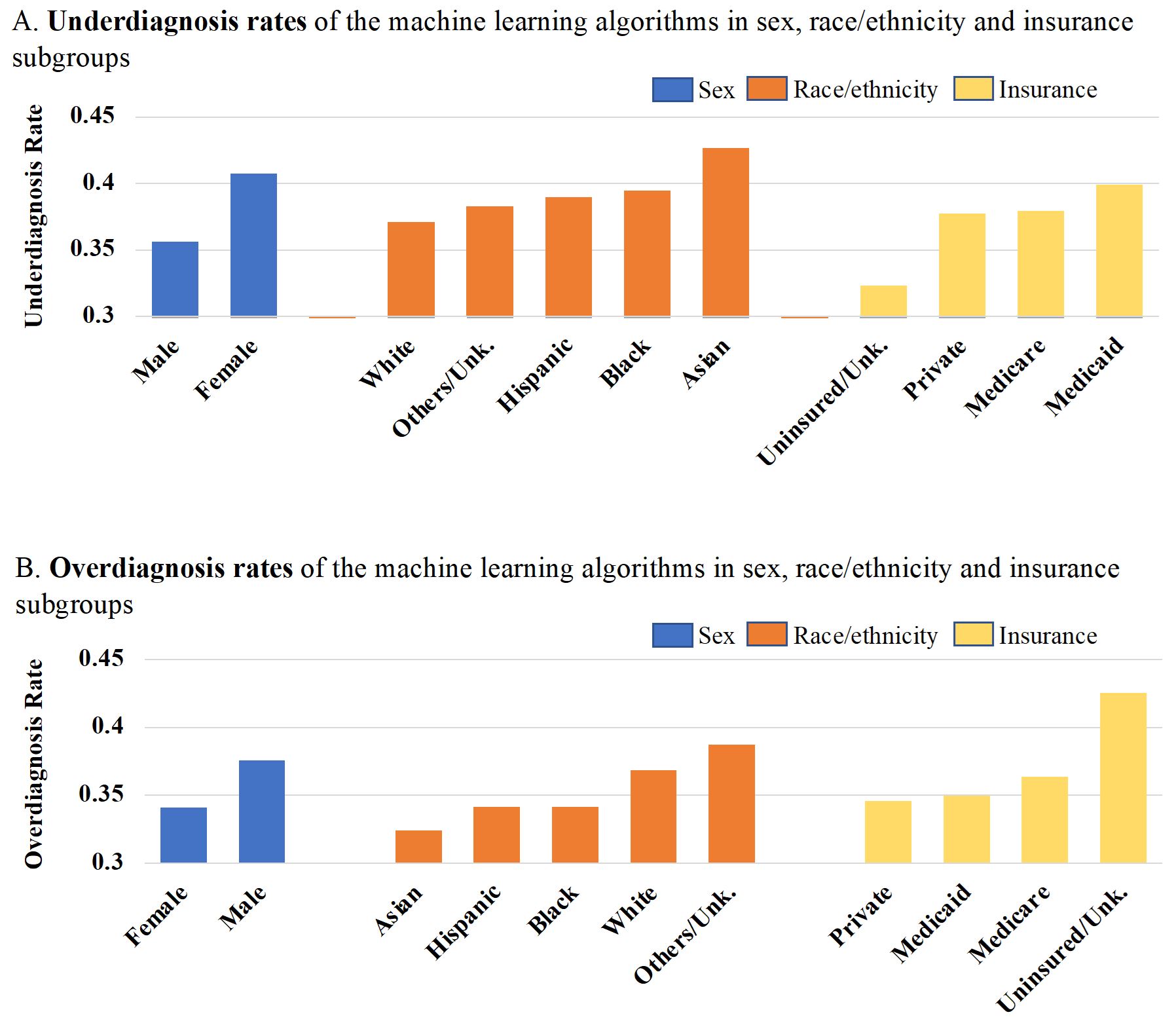
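To make the mechanism concrete, the following is a minimal Monte Carlo sketch of score-based allocation under scarcity. It is not the simulation from the paper: the tier cut-offs, the lottery tie-break, the group labels, and the function names `priority_tier` and `simulate_allocation` are all assumptions chosen for illustration, and any cohort passed to it here is synthetic. It only shows how a protocol that never looks at group membership can still allocate unequally when severity scores differ between groups.

```python
import random


def priority_tier(sofa):
    """Map a SOFA score to a priority tier (1 = highest priority).

    The cut-offs are illustrative placeholders, not taken from any protocol.
    """
    if sofa <= 7:
        return 1
    if sofa <= 11:
        return 2
    return 3


def simulate_allocation(patients, n_ventilators, n_trials=1000, seed=0):
    """Estimate, per group, the fraction of patients allocated a ventilator.

    patients: list of (group, sofa) tuples (synthetic in this sketch).
    Ties within a tier are broken by lottery; that lottery is the random
    element the Monte Carlo trials average over.
    """
    rng = random.Random(seed)
    totals = {}
    allocated = {}
    for group, _ in patients:
        totals[group] = totals.get(group, 0) + 1
        allocated.setdefault(group, 0)

    for _ in range(n_trials):
        # Rank by tier first, then by a fresh random lottery number.
        ranked = sorted(
            patients, key=lambda p: (priority_tier(p[1]), rng.random())
        )
        for group, _ in ranked[:n_ventilators]:
            allocated[group] += 1

    return {g: allocated[g] / (totals[g] * n_trials) for g in totals}


# Synthetic cohort: group "B" has systematically higher SOFA scores.
cohort = [("A", s) for s in (5, 6, 7, 9, 10)] + [
    ("B", s) for s in (7, 9, 10, 12, 13)
]
rates = simulate_allocation(cohort, n_ventilators=5)
for group in sorted(rates):
    print(group, round(rates[group], 3))
```

The allocation rule never reads the group label, yet the group with higher scores receives fewer ventilators because the score itself is distributed unequally. A fuller simulation would also need survival outcomes and patient arrivals over time, which this sketch omits.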

In our Circulation: Heart Failure paper titled "Improving Fairness in the Prediction of Heart Failure Length-of-Stay and Mortality by Integrating Social Determinants of Health" (link on the right), we observed significant differences in underdiagnosis (false negative rate) and overdiagnosis (false positive rate) across sex, ethnoracial, and insurance subgroups when using a random forest classifier to predict the composite heart failure outcome (see Figure below). We integrated community-level social determinants of health into the feature space of individuals in order to improve the performance and fairness of ML-based heart failure predictive models.
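The subgroup comparison described above rests on two standard quantities: the false negative rate, FN / (FN + TP), and the false positive rate, FP / (FP + TN), each computed separately within a subgroup. The sketch below shows one way to compute them from binary labels and predictions. The function name `subgroup_error_rates` and the toy inputs are hypothetical and are not taken from the paper's code or data.

```python
from collections import defaultdict


def subgroup_error_rates(y_true, y_pred, groups):
    """Return {group: {"fnr": ..., "fpr": ...}} for binary labels (0/1).

    fnr = FN / (FN + TP): share of true positives the model missed
          (underdiagnosis).
    fpr = FP / (FP + TN): share of true negatives the model flagged
          (overdiagnosis).
    A rate is NaN when the subgroup has no cases in its denominator.
    """
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            counts[group]["tp" if pred == 1 else "fn"] += 1
        else:
            counts[group]["fp" if pred == 1 else "tn"] += 1

    rates = {}
    for group, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["fp"] + c["tn"]
        rates[group] = {
            "fnr": c["fn"] / positives if positives else float("nan"),
            "fpr": c["fp"] / negatives if negatives else float("nan"),
        }
    return rates


# Toy example with made-up labels, predictions, and subgroup tags.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 0, 0, 0, 0, 0, 1, 0]
groups = ["F", "F", "F", "F", "M", "M", "M", "M"]
print(subgroup_error_rates(y_true, y_pred, groups))
```

Comparing these rates between subgroups, for instance as a difference or a ratio, gives a simple gap measure. Which gap matters most depends on the clinical cost of a missed case versus a false alarm.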

Select Publications

- Using Machine Learning to Integrate Sociobehavioral Factors in Predicting Cardiovascular-Related Mortality Risk. Studies in Health Technology and Informatics 2019

- Simulation of Ventilator Allocation in Critically Ill Patients with COVID-19. Am J Respir Crit Care Med (AJRCCM) 2021

- Improving Fairness in the Prediction of Heart Failure Length-of-Stay and Mortality by Integrating Social Determinants of Health. Circulation: Heart Failure 2022 (accepted) [preprint]

- Characterizing Healthcare Costs in the United States: Inequities and Poor Outcomes. Under Review [preprint]